TeamViewer DEX Helps with

Ensure a smooth and uninterrupted digital experience for employees, minimizing frustrations and unnecessary disruptions.

Optimize processes with enhanced visibility and automation, driving proactive remediations, exceptional service, and reduced costs.

Resolve IT issues proactively and in real-time to reduce service desk incidents, minimize downtime, and maintain smooth, efficient operations.

Identify, notify, and fix compliance drift, digital friction, and end-user frustration issues.

TeamViewer DEX for

Lightweight, always-on communication, real-time actions, and automated client health remediation.

Optimize hardware spend while facilitating proactive hardware asset management.

Gain clear insights into software usage and reclaim underused, redundant, or vulnerable software.

Core Capabilities

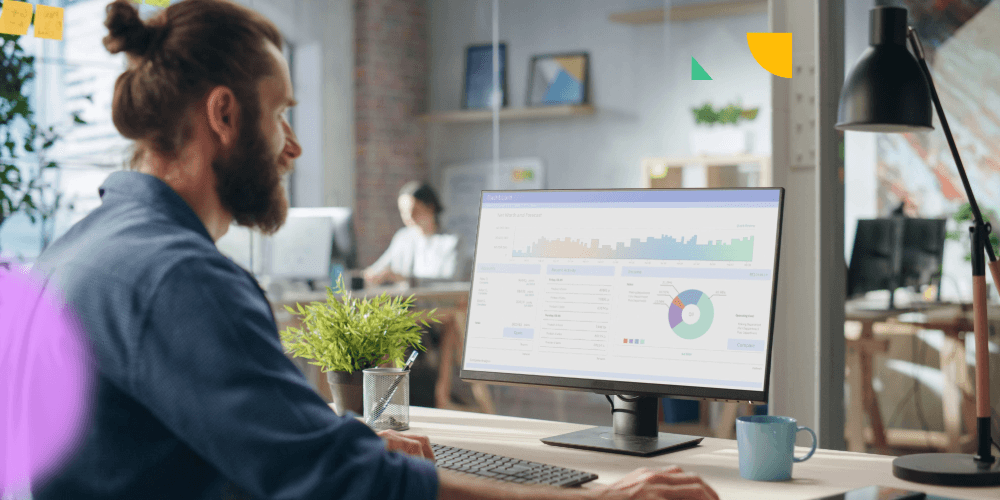

TeamViewer DEX Platform

Helps IT teams improve end-user experience, tighten security, reduce costs, and evolve IT operations from cost center to strategic enabler.

Dig into original DEX analysis, case studies, on-demand webinars, and more.

Be a DEX leader with useful strategies, how-to guides, and fresh platform updates.

Select your specific use cases to see demo videos narrated by product experts.

Understand key terms and concepts related to Digital Employee Experience.

What is Digital Employee Experience (DEX)?

From the software and hardware used each day to IT interactions, DEX is the total of all digital touchpoints an employee encounters at work.

Customer Success

How Britain’s Favorite Retailer has transformed endpoint management across more than 1,000 stores with 1E

About 1E

At 1E, we reimagine how technology serves people and create new ways for IT to shape the future of work.